HOTLINE

Daniel Snider, Fanny Chevalier, Gennady Pekhimenko

University of Toronto

In Proceedings of Machine Learning and Systems (MLSys 2023)

Abstract

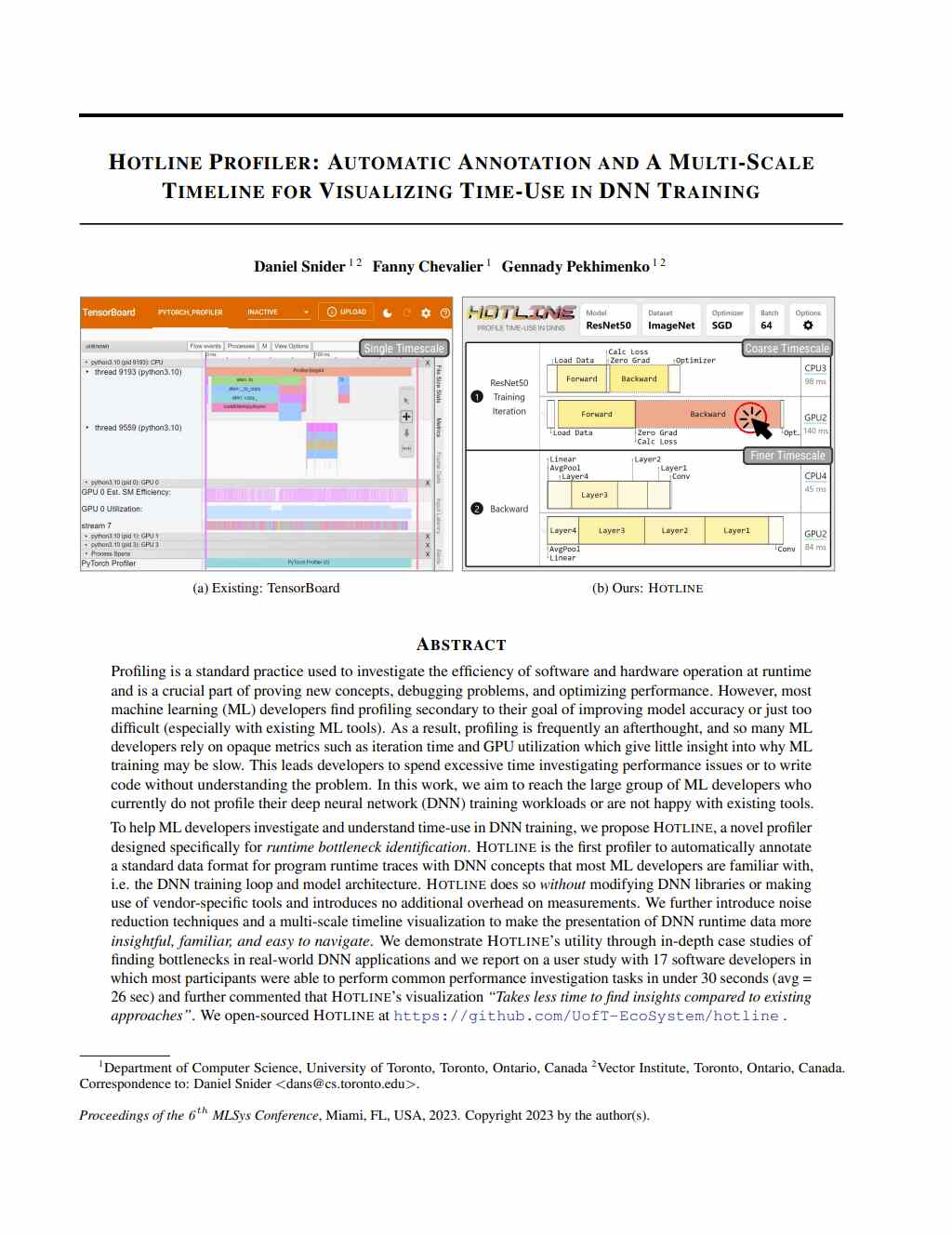

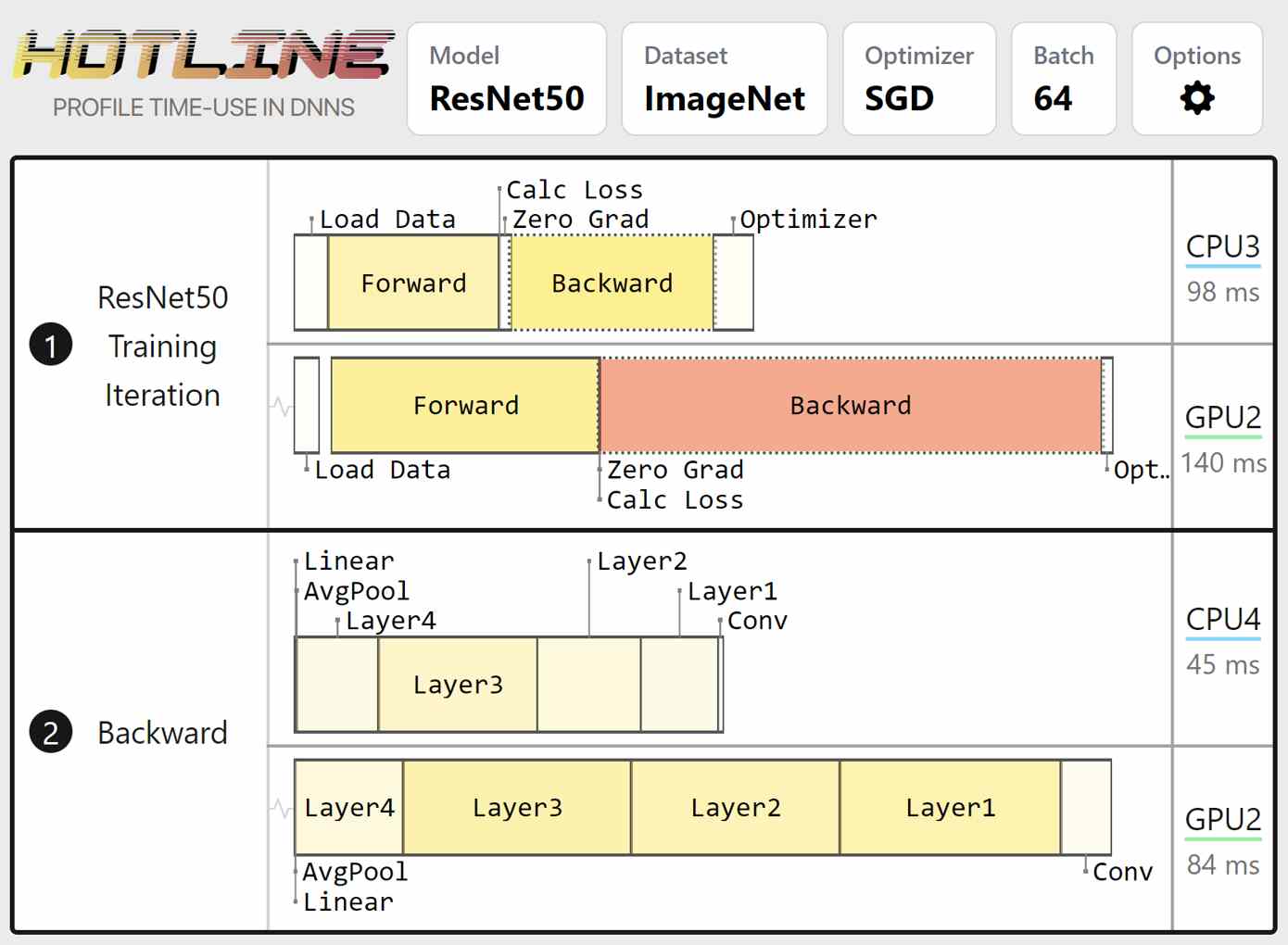

To help ML developers investigate and understand time-use in DNN training, we propose Hotline, a novel profiler designed specifically for runtime bottleneck identification. Hotline is the first profiler to automatically annotate a standard data format for program runtime traces with DNN concepts that most ML developers are familiar with, i.e., the DNN training loop and model architecture. Hotline does so without modifying DNN libraries or using vendor-specific tools, and it introduces no additional overhead on measurements. We further introduce noise reduction techniques and a multi-scale timeline visualization to make the presentation of DNN runtime data more insightful, familiar, and easy to navigate. We demonstrate Hotline’s utility through in-depth case studies of finding bottlenecks in real-world DNN applications. We also report on a user study with 17 software developers in which most participants were able to perform common performance investigation tasks in under 30 seconds (avg = 26 sec); participants further commented that Hotline’s visualization “Takes less time to find insights compared to existing approaches”.

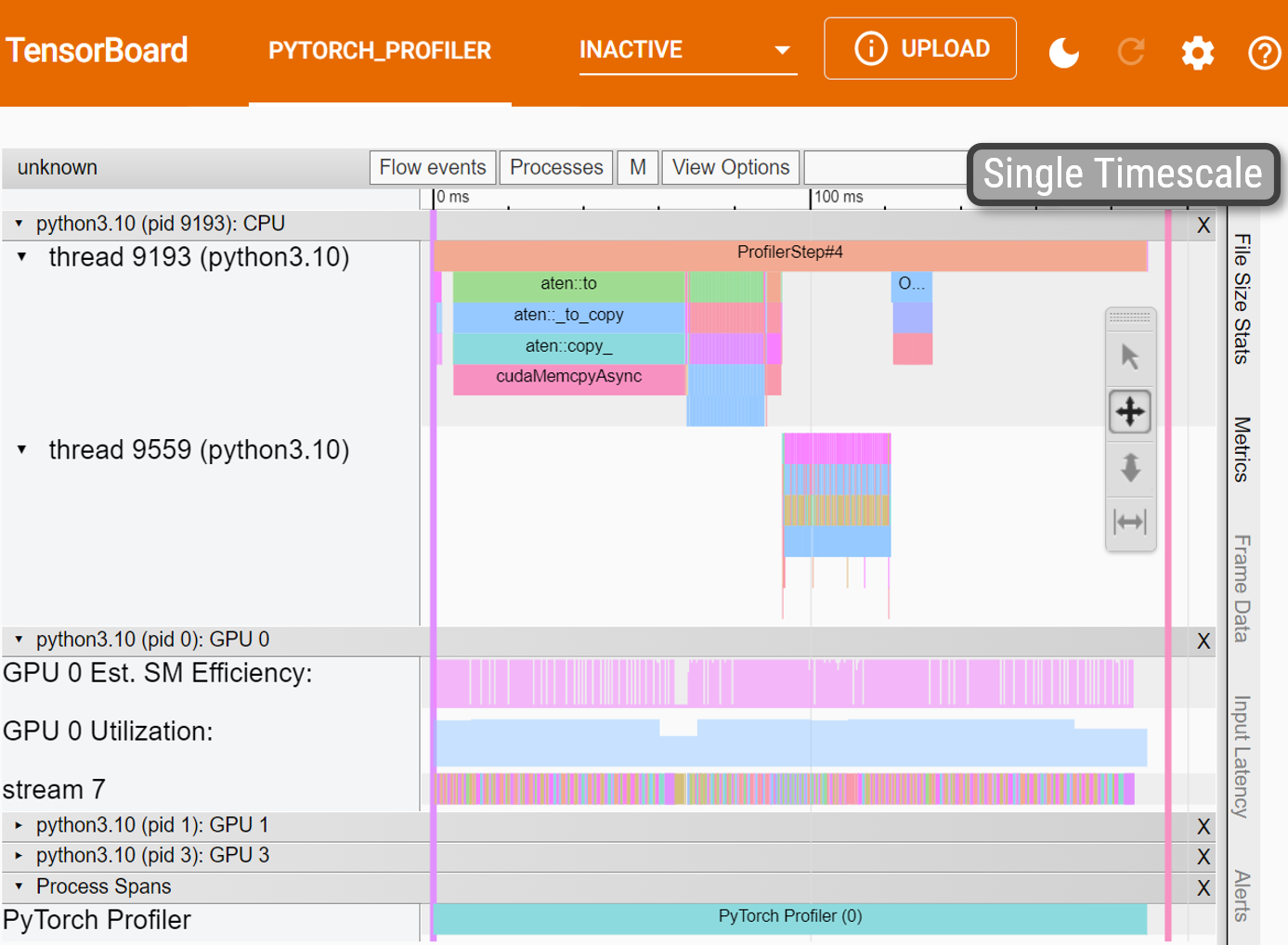

Existing: TensorBoard. Ours: Hotline.

Motivation

Profiling is a standard practice used to investigate the efficiency of software and hardware operation at runtime and is a crucial part of proving new concepts, debugging problems, and optimizing performance. However, most machine learning (ML) developers either treat profiling as secondary to their goal of improving model accuracy or find it too difficult, especially with existing ML tools.

Why is profiling important?

- Why 1: Find Bottlenecks

- Why 2: Communicate Findings

- Why 3: Guide Optimization

Why is profiling hard?

- Challenge 1: Information Overload

- Challenge 2: Disparate Granularities

- Challenge 3: Discontiguous Execution

What can be done to improve?

- Goal 1: Easy to Navigate

- Goal 2: Reduce Noise

- Goal 3: More Familiar

Contributions

1) Automatic Annotation of DNN Concepts

Reconciling operations defined in DNN models with operations in DNN runtime traces is non-trivial and tedious to do manually, so we have developed an algorithm that does it automatically. Hotline generates three types of annotations that existing profilers do not offer: stages of training, model architecture, and arbitrary but interesting sections not defined in the user’s source code, such as inter-GPU communication.

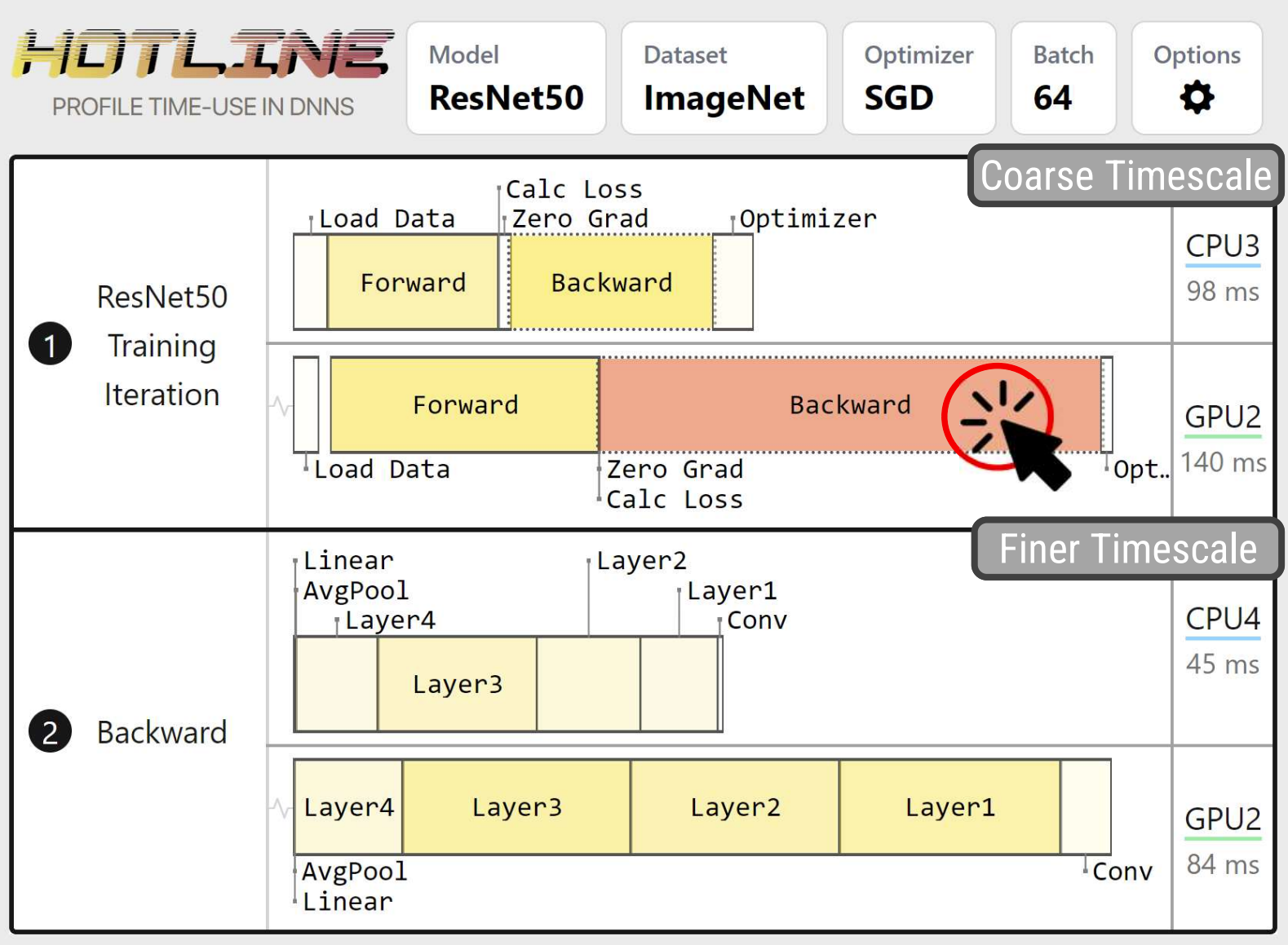
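To make the idea of annotating a trace with training stages concrete, here is a minimal Python sketch. It is not Hotline's algorithm: the `TraceEvent` and `StageSpan` types, the stage boundaries, and the event names are all hypothetical, and the matching rule (an event belongs to the stage whose time span contains its start time) is an assumption chosen for illustration. Events that fall outside every span are grouped as "unannotated".

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class TraceEvent:
    """One operation in a runtime trace (hypothetical, simplified)."""
    name: str
    ts: float   # start time in microseconds
    dur: float  # duration in microseconds
    stage: Optional[str] = None  # filled in by annotate()


@dataclass
class StageSpan:
    """A labelled time range, e.g. one stage of the training loop."""
    label: str
    start: float
    end: float


def annotate(events: List[TraceEvent], spans: List[StageSpan]) -> List[TraceEvent]:
    """Label each event with the first span that contains its start time."""
    for ev in events:
        for span in spans:
            if span.start <= ev.ts < span.end:
                ev.stage = span.label
                break
    return events


def time_per_stage(events: List[TraceEvent]) -> Dict[str, float]:
    """Sum event durations per stage; unlabelled events are grouped together."""
    totals: Dict[str, float] = {}
    for ev in events:
        key = ev.stage or "unannotated"
        totals[key] = totals.get(key, 0.0) + ev.dur
    return totals


# Hypothetical stage boundaries and events for one training iteration.
spans = [
    StageSpan("forward", 0, 100),
    StageSpan("backward", 100, 250),
    StageSpan("optimizer", 250, 300),
]
events = [
    TraceEvent("conv2d", 5, 40),
    TraceEvent("relu", 50, 10),
    TraceEvent("conv2d_backward", 120, 90),
    TraceEvent("adam_step", 255, 30),
    TraceEvent("all_reduce", 310, 20),  # falls outside every span
]

annotate(events, spans)
totals = time_per_stage(events)
print(totals)
```

Once every event carries a stage label, per-stage totals like these are the kind of summary that makes a bottleneck easier to spot than scanning raw operation names.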

2) Novel Multi-Scale Timeline

We introduce the Multi-Scale Timeline, a visualization technique well suited to navigating and interpreting the complex timelines found in DNN training. We show that it is promising for this purpose, and it may have broad utility in the field of systems profiling.

3) Evaluation of Utility

We present in-depth case studies illustrating Hotline’s unique advantages when used to investigate time-use bottlenecks. Additionally, we report on software developers’ qualitative impressions of Hotline’s design and show how rapidly common performance investigation tasks can be performed.

Citation

@article{2023Hotline,
  title={Hotline Profiler: Automatic Annotation and A Multi-Scale Timeline for Visualizing Time-Use in DNN Training},
  author={Snider, Daniel and Chevalier, Fanny and Pekhimenko, Gennady},
  journal={Conference on Machine Learning and Systems (MLSys)},
  year={2023}
}

Acknowledgements: This project was supported by the Canada Foundation for Innovation JELF grant, NSERC Discovery grant, AWS Machine Learning Research Award (MLRA), Facebook Faculty Research Award, Google Scholar Research Award, and VMware Early Career Faculty Grant.